Data Science: Keras Cheat Sheet. Keras is an open-source neural-network library written in Python that can run on top of TensorFlow. It is our recommended library for deep learning in Python, especially for beginners: its minimalist, modular approach makes it easy to get deep neural networks up and running. For the most up-to-date full tutorial and installation instructions, visit the online tutorial at elitedatascience.com.

By Karlijn Willems, DataCamp.

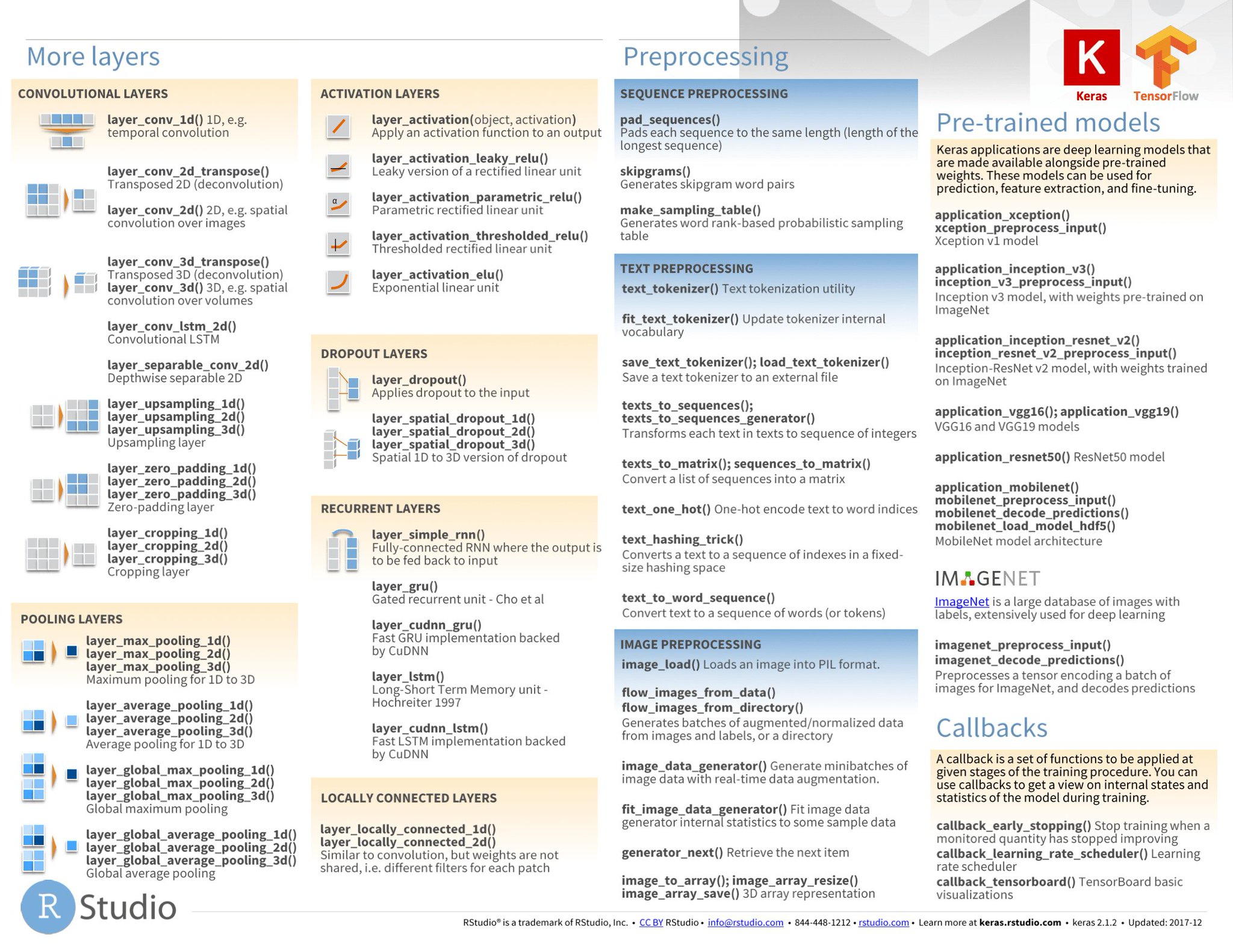


Deep Learning With Python

Deep learning is a subfield of machine learning built on a set of algorithms inspired by the structure and function of the brain. These algorithms are usually called Artificial Neural Networks (ANN). Deep learning is one of the hottest fields in data science, with case studies reporting impressive results in robotics, image recognition and Artificial Intelligence (AI).

This undoubtedly sounds very exciting (and it is!), but it is definitely one of the more complex topics in data science to get into. If you have prior machine learning experience, though, you should be able to get started with deep learning fairly easily, as you will already have understood, practiced and assimilated the necessary mathematics, statistics and machine learning basics. Maybe you have already worked on machine learning projects or even participated in a Kaggle or DrivenData competition!

However, even with this prior experience, you’ll still find this complex topic challenging! That doesn’t mean you can’t dive into code straight away: you can get a high-level idea of how deep learning techniques work by using, for example, the Keras package. This package is ideal for beginners, as it offers a high-level neural networks API with which you can develop and evaluate deep learning models easily and quickly.

Nevertheless, doubts may always arise, and when they do, take a look at DataCamp’s Keras tutorial or download the cheat sheet for free!

In what follows, we’ll dive deeper into the structure and the contents of the cheat sheet.

Keras Cheat Sheet

Starting with Keras is not too hard if you take into account that there are some steps that you need to go through: gathering your data, preprocessing it, constructing your model, compiling and fitting your model, evaluating the model’s performance, making predictions and fine-tuning the model.

This might seem quite abstract. Let’s take a quick look at an example.

A very basic use of the Keras library is a simple neural network with just one input and one output layer. To build the model, you need to import two classes from the Keras package: Sequential (from keras.models) and Dense (from keras.layers).

Next, you need some data. This example makes use of the random module of NumPy, the fundamental package for scientific computing in Python, to quickly generate some data and labels for you. That’s why you also import the numpy package with the conventional alias np. With the functions from the random module, you’ll first construct an array with size (1000,100). Next, you’ll also construct a labels array that consists of zeroes and ones and is of size (1000,1).

With the data at hand, you can start constructing your neural network architecture. A quick way to get started is to use the Keras Sequential model: it’s a linear stack of layers. You can create the model by passing a list of layer instances to the constructor or, as is done here, by starting from an empty model with model = Sequential() and adding layers one at a time. You first add an input layer to the model with the add() function. You pick a dense, or fully connected, layer and indicate that it is the input layer by using the argument input_dim. You also use one of the most common activation functions here, relu, and you pick 32 units for the input layer of your model. Next, you add another dense layer as an output layer. It’s of size 1 with a sigmoid activation function to calculate the probabilities.

With the model built, you can compile it with the help of the compile() function. You configure the model with the rmsprop optimizer and the binary_crossentropy loss function. Additionally, you can monitor the accuracy during training by passing ['accuracy'] to the metrics argument.

Next, you fit the model to the data with fit(): you pass in the data and the labels, and set the number of epochs and the batch size. Lastly, you can start making predictions with the help of the predict() function. Just pass in the data!
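The walkthrough above can be sketched roughly as follows. This is a minimal sketch rather than the cheat sheet's exact code: it assumes a recent Keras installation, declares the input size with an `Input(shape=(100,))` entry instead of the `input_dim` argument, and picks `epochs=10` and `batch_size=32` as illustrative values.

```python
import numpy as np
from keras import Input
from keras.models import Sequential
from keras.layers import Dense

# Random data: 1000 samples with 100 features, plus binary labels of shape (1000, 1).
data = np.random.random((1000, 100))
labels = np.random.randint(2, size=(1000, 1))

# Linear stack of layers: a 32-unit ReLU layer followed by a 1-unit sigmoid output.
model = Sequential()
model.add(Input(shape=(100,)))          # assumption: explicit Input instead of input_dim
model.add(Dense(32, activation='relu'))
model.add(Dense(1, activation='sigmoid'))

# Configure the optimizer, the loss and the metric to monitor.
model.compile(optimizer='rmsprop',
              loss='binary_crossentropy',
              metrics=['accuracy'])

# Train on the random data, then predict a probability for every sample.
model.fit(data, labels, epochs=10, batch_size=32, verbose=0)
predictions = model.predict(data, verbose=0)
```

Because the labels are random, the reported accuracy carries no meaning here; the point is only the order of the calls.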

Simple enough, right? Let’s take a look at all these steps in more detail.

Data

As you might have gathered from the short example that was just covered in the first section, your data needs to be stored as a NumPy array or as a list of NumPy arrays in order to get started. Ideally, you also split the data into training and test sets, which is something that was neglected in the example above. For that, you can resort to the train_test_split() function, which you can find in the model_selection module of Scikit-Learn, the library for machine learning in Python (older releases exposed it in the cross_validation module, which has since been removed).

If you want to work with the data sets that come with the Keras library, you can easily do so by importing them from the datasets module. Each data set provides a load_data() function that loads the data into your workspace, already split into training and test sets. Alternatively, you can use the urllib library and its request module to open and read URLs.

Preprocessing

Now that you have the data, you can easily proceed to preprocessing it. Of course, depending on your data, you’ll need to resort to different functions to make sure that the data looks exactly the way it needs to look to pass it to the neural network model.

For example, you can use sequence padding with pad_sequences() to ensure that all sequences in a list have the same length, or you can use one-hot encoding with to_categorical() to turn a vector of integer class labels into a binary matrix with one column per class. These functions come with the Keras library.
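To make the effect of these two helpers concrete, here is a plain-Python sketch of what they compute. The function names `pad_sequences_sketch` and `to_categorical_sketch` are hypothetical stand-ins, not the Keras implementations, and the padding behaviour (zeros added at the front, long sequences trimmed from the front) is an assumption about the Keras defaults.

```python
def pad_sequences_sketch(sequences, maxlen=None, value=0):
    """Pad (or trim) every sequence at the front so all share one length."""
    if maxlen is None:
        maxlen = max(len(seq) for seq in sequences)
    padded = []
    for seq in sequences:
        seq = list(seq)
        # Keep only the last `maxlen` items when the sequence is too long.
        trimmed = seq[len(seq) - maxlen:] if len(seq) > maxlen else seq
        padded.append([value] * (maxlen - len(trimmed)) + trimmed)
    return padded


def to_categorical_sketch(labels, num_classes=None):
    """Turn integer class labels into one-hot rows, one column per class."""
    if num_classes is None:
        num_classes = max(labels) + 1
    return [[1 if k == label else 0 for k in range(num_classes)]
            for label in labels]


print(pad_sequences_sketch([[1, 2, 3], [4, 5], [6]]))
print(to_categorical_sketch([0, 2, 1]))
```

In real code you would call the Keras functions directly; the sketch only shows the shape of their output.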

However, as mentioned before, you will most probably also need to resort to other libraries for preprocessing: think of the train and test set splits, or the standardization/normalization functions that come with the Scikit-Learn library. If you’d like to know more, take a look at the scikit-learn documentation or DataCamp’s scikit-learn cheat sheet.

Model Architecture

With your preprocessed data, you can start making your model. As you saw in the basic example above, you first start off by using the Sequential model. Then, you can get down to the real work and add layers to your model!


Sequential Model

Import Sequential from keras.models and initialize your model by assigning the Sequential() constructor to model. For this cheat sheet, we’ll be working with three examples of models: the Multilayer Perceptron (MLP) for binary and multi-class classification and regression, the Convolutional Neural Network (CNN) and the Recurrent Neural Network (RNN).

Multilayer Perceptron (MLP)

Networks of perceptrons are multi-layer perceptrons, which are also known as “feed-forward neural networks”. As you may have guessed, these are more complex networks than the single perceptron, as they consist of multiple neurons organized in layers. The number of layers is usually limited to two or three, but theoretically, there is no limit!

Binary Classification

First up is the MLP model for binary classification. In this case, you’ll make a model to predict whether or not Pima Indians have an onset of diabetes within five years.

To do this, you first import Dense from keras.layers, and then you can start building your neural network architecture. Just like in the example that was given at the start of this post, you first need to make an input layer. Since the model needs to know what input shape to expect, you’ll always find one of the input_shape, input_dim, input_length, or batch_size arguments in the input layer.

Multi-Class Classification

Next up, you also build a multi-class classification model for the MNIST data set to correctly recognize handwritten digits. In this model, you’ll not only use Dense layers, but also Dropout layers. The function of the dropout layers is to ignore randomly selected neurons during training, thus reducing the chances of overfitting.

As you saw in the first model, you also pass the input_shape for the input layer and you also fill in the activation argument for all Dense layers. You set the dropout rate at 0.2 for the Dropout layers.

Regression

A classic data set for regression is the Boston housing data set. In this case, you build a simple model with just an input and an output layer. Once again, the Dense layer is used, to which you pass the units, the activation function and the input dimensions. In the output layer, you specify that you want to have one unit back.

Convolutional Neural Network (CNN)

A Convolutional Neural Network is a type of deep, feed-forward artificial neural network that has been applied successfully to analyzing visual imagery. In this case, the neural network model in the cheat sheet is built for the CIFAR10 data set, which is well known and widely used for object recognition.

Here, some other layers are imported in order to build your CNN model: Activation, Conv2D, MaxPooling2D, and Flatten. These types of layers, in combination with the ones that you have already seen, are combined in such a way that you can classify the CIFAR10 images.

Note that you can find the complete example in the examples folder of the Keras repository.

Recurrent Neural Network (RNN)

A Recurrent Neural Network is the last type of network that is included in the cheat sheet: it’s a popular model that has shown good results in NLP tasks. RNNs are not really like feed-forward networks: the connections between their units form a directed cycle. For this cheat sheet, the model that was included is one for the IMDB data set. The task is sentiment classification.

This last example uses the Embedding and LSTM layers. With the Embedding layer, you can map each movie review into a real vector domain. You can then pass the output of the Embedding layer straight into the LSTM layer. Lastly, make sure to add an output layer with only 1 unit and an activation function (in this case, the sigmoid activation function is used).

PS. if you want to know more about neural network architectures, definitely check out this mostly complete chart of neural networks. Also, if you’d like to know more on constructing neural network models with Keras, check out DataCamp’s Keras course.

By Afshine Amidi and Shervine Amidi

Overview

Architecture of a traditional CNN Convolutional neural networks, also known as CNNs, are a specific type of neural network generally composed of convolution, pooling and fully connected layers, each of which is described below.

The convolution layer and the pooling layer can be fine-tuned with respect to hyperparameters that are described in the next sections.

Types of layer

Convolution layer (CONV) The convolution layer (CONV) uses filters that perform convolution operations as it scans the input $I$ with respect to its dimensions. Its hyperparameters include the filter size $F$ and stride $S$. The resulting output $O$ is called a feature map or activation map.

Remark: the convolution step can be generalized to the 1D and 3D cases as well.

Pooling (POOL) The pooling layer (POOL) is a downsampling operation, typically applied after a convolution layer, which provides some spatial invariance. In particular, max and average pooling are special kinds of pooling where the maximum and average value is taken, respectively.

| Type | Max pooling | Average pooling |
|---|---|---|
| Purpose | Each pooling operation selects the maximum value of the current view | Each pooling operation averages the values of the current view |
| Comments | • Preserves detected features • Most commonly used | • Downsamples feature map • Used in LeNet |
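The two pooling variants can be illustrated with a small plain-Python sketch. The function name `pool2d` and the 4×4 example values are made up for illustration, and the sketch handles a single channel only.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Apply max or average pooling to a 2D list of numbers (one channel)."""
    height, width = len(feature_map), len(feature_map[0])
    output = []
    for top in range(0, height - size + 1, stride):
        row = []
        for left in range(0, width - size + 1, stride):
            # Collect the values inside the current size x size view.
            window = [feature_map[top + i][left + j]
                      for i in range(size) for j in range(size)]
            if mode == "max":
                row.append(max(window))
            else:
                row.append(sum(window) / len(window))
        output.append(row)
    return output


fmap = [[1, 3, 2, 4],
        [5, 6, 1, 2],
        [7, 2, 9, 0],
        [1, 8, 3, 4]]

print(pool2d(fmap, mode="max"))      # [[6, 4], [8, 9]]
print(pool2d(fmap, mode="average"))  # [[3.75, 2.25], [4.5, 4.0]]
```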

Fully Connected (FC) The fully connected layer (FC) operates on a flattened input where each input is connected to all neurons. If present, FC layers are usually found towards the end of CNN architectures and can be used to optimize objectives such as class scores.

Filter hyperparameters

The convolution layer contains filters, and it is important to know the meaning of their hyperparameters.

Dimensions of a filter A filter of size $F\times F$ applied to an input containing $C$ channels is an $F \times F \times C$ volume that performs convolutions on an input of size $I \times I \times C$ and produces an output feature map (also called activation map) of size $O \times O \times 1$.

Remark: the application of $K$ filters of size $F\times F$ results in an output feature map of size $O \times O \times K$.

Stride For a convolutional or a pooling operation, the stride $S$ denotes the number of pixels by which the window moves after each operation.

Zero-padding Zero-padding denotes the process of adding $P$ zeroes to each side of the boundaries of the input. This value can either be manually specified or automatically set through one of the three modes detailed below:

| Mode | Valid | Same | Full |
|---|---|---|---|
| Value | $P = 0$ | $P_\text{start} = \Bigl\lfloor\frac{S \lceil\frac{I}{S}\rceil - I + F - S}{2}\Bigr\rfloor$ $P_\text{end} = \Bigl\lceil\frac{S \lceil\frac{I}{S}\rceil - I + F - S}{2}\Bigr\rceil$ | $P_\text{start}\in[\![0,F-1]\!]$ $P_\text{end} = F-1$ |
| Purpose | • No padding • Drops last convolution if dimensions do not match | • Padding such that feature map size has size $\Bigl\lceil\frac{I}{S}\Bigr\rceil$ • Output size is mathematically convenient • Also called 'half' padding | • Maximum padding such that end convolutions are applied on the limits of the input • Filter 'sees' the input end-to-end |

Tuning hyperparameters

Parameter compatibility in convolution layer By noting $I$ the length of the input volume size, $F$ the length of the filter, $P$ the amount of zero padding, $S$ the stride, then the output size $O$ of the feature map along that dimension is given by:

$$O = \frac{I - F + P_\text{start} + P_\text{end}}{S} + 1$$

Remark: oftentimes, $P_\text{start} = P_\text{end} \triangleq P$, in which case we can replace $P_\text{start} + P_\text{end}$ by $2P$ in the formula above.
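As a quick check of this relation, the sketch below computes the output size $O = (I - F + P_\text{start} + P_\text{end})/S + 1$ and the 'same' padding amounts from the padding table. The function names are hypothetical, floor division is used so that an incomplete last window is dropped, and clamping the total padding at zero is an added assumption for the case where the stride exceeds the filter size.

```python
import math


def conv_output_size(input_size, filter_size, stride=1, pad_start=0, pad_end=0):
    """O = (I - F + P_start + P_end) / S + 1, dropping an incomplete last window."""
    return (input_size - filter_size + pad_start + pad_end) // stride + 1


def same_padding(input_size, filter_size, stride=1):
    """Return (P_start, P_end) so that the output size is ceil(I / S)."""
    total = stride * math.ceil(input_size / stride) - input_size + filter_size - stride
    total = max(total, 0)  # assumption: never pad by a negative amount
    return total // 2, total - total // 2


# 'Valid' mode: no padding.
print(conv_output_size(32, 5))            # 28

# 'Same' mode: output size equals ceil(I / S).
start, end = same_padding(8, 3, stride=2)
print(start, end)                          # 0 1
print(conv_output_size(8, 3, 2, start, end))  # 4
```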

Understanding the complexity of the model In order to assess the complexity of a model, it is often useful to determine the number of parameters that its architecture will have. In a given layer of a convolutional neural network, it is done as follows:

| | CONV | POOL | FC |
|---|---|---|---|
| Input size | $I \times I \times C$ | $I \times I \times C$ | $N_{\text{in}}$ |
| Output size | $O \times O \times K$ | $O \times O \times C$ | $N_{\text{out}}$ |
| Number of parameters | $(F \times F \times C + 1) \cdot K$ | $0$ | $(N_{\text{in}} + 1 ) \times N_{\text{out}}$ |
| Remarks | • One bias parameter per filter • In most cases, $S < F$ • A common choice for $K$ is $2C$ | • Pooling operation done channel-wise • In most cases, $S = F$ | • Input is flattened • One bias parameter per neuron • The number of FC neurons is free of structural constraints |
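The parameter counts in the table translate directly into code. The helper names below are hypothetical, and the example layer sizes are arbitrary.

```python
def conv_params(filter_size, in_channels, num_filters):
    """(F * F * C + 1) * K: each filter has F*F*C weights plus one bias."""
    return (filter_size * filter_size * in_channels + 1) * num_filters


def pool_params():
    """Pooling has no trainable parameters."""
    return 0


def fc_params(n_in, n_out):
    """(N_in + 1) * N_out: each output neuron has N_in weights plus one bias."""
    return (n_in + 1) * n_out


# 32 filters of size 3x3 applied to a 3-channel input.
print(conv_params(3, 3, 32))  # 896
# Fully connected layer from 512 inputs to 10 outputs.
print(fc_params(512, 10))     # 5130
```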

Receptive field The receptive field at layer $k$ is the area denoted $R_k \times R_k$ of the input that each pixel of the $k$-th activation map can 'see'. By calling $F_j$ the filter size of layer $j$ and $S_i$ the stride value of layer $i$ and with the convention $S_0 = 1$, the receptive field at layer $k$ can be computed with the formula:

$$R_k = 1 + \sum_{j=1}^{k} (F_j - 1) \prod_{i=0}^{j-1} S_i$$

For example, with $F_1 = F_2 = 3$ and $S_1 = S_2 = 1$, this gives $R_2 = 1 + 2\cdot 1 + 2\cdot 1 = 5$.
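The receptive field can be accumulated layer by layer. The sketch below assumes the usual form of the relation, in which layer $j$ adds $(F_j - 1)$ times the product of the strides of all earlier layers (with $S_0 = 1$); the function name is hypothetical. It reproduces the value 5 from the example with two 3×3 filters and unit strides.

```python
def receptive_field(filter_sizes, strides):
    """filter_sizes[j-1] is F_j and strides[j-1] is S_j for layers j = 1..k."""
    field = 1
    jump = 1  # running product S_0 * S_1 * ... * S_{j-1}, with S_0 = 1
    for filter_size, stride in zip(filter_sizes, strides):
        field += (filter_size - 1) * jump
        jump *= stride
    return field


print(receptive_field([3, 3], [1, 1]))  # 5
print(receptive_field([3, 3], [2, 1]))  # 7
```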

Commonly used activation functions

Rectified Linear Unit The rectified linear unit layer (ReLU) is an activation function $g$ that is used on all elements of the volume. It aims at introducing non-linearities to the network. Its variants are summarized in the table below:


| ReLU | Leaky ReLU | ELU |
|---|---|---|
| $g(z)=\max(0,z)$ | $g(z)=\max(\epsilon z,z)$ with $\epsilon\ll1$ | $g(z)=\max(\alpha(e^z-1),z)$ with $\alpha\ll1$ |
| • Non-linearity complexities biologically interpretable | • Addresses dying ReLU issue for negative values | • Differentiable everywhere |
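The three variants can be written as scalar functions. This is a sketch under assumptions: the default values `epsilon=0.01` and `alpha=0.1` are arbitrary small constants, and ELU is written in its common piecewise form ($z$ for positive inputs, $\alpha(e^z-1)$ otherwise), which agrees with the max form in the table for negative inputs when $\alpha \le 1$.

```python
import math


def relu(z):
    """g(z) = max(0, z)."""
    return max(0.0, z)


def leaky_relu(z, epsilon=0.01):
    """g(z) = max(epsilon * z, z): a small slope for negative inputs."""
    return max(epsilon * z, z)


def elu(z, alpha=0.1):
    """Piecewise ELU: z for z > 0, alpha * (exp(z) - 1) otherwise."""
    return z if z > 0 else alpha * (math.exp(z) - 1.0)


for value in (-2.0, 0.0, 3.0):
    print(value, relu(value), leaky_relu(value), elu(value))
```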

Softmax The softmax step can be seen as a generalized logistic function that takes as input a vector of scores $x\in\mathbb{R}^n$ and outputs a vector of output probability $p\in\mathbb{R}^n$ through a softmax function at the end of the architecture. It is defined as follows:

$$p = \begin{pmatrix} p_1 \\ \vdots \\ p_n \end{pmatrix} \quad\text{where}\quad p_i = \frac{e^{x_i}}{\sum_{j=1}^{n} e^{x_j}}$$
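A minimal softmax sketch in plain Python, using the standard definition $p_i = e^{x_i} / \sum_j e^{x_j}$. Subtracting the largest score before exponentiating is a common numerical-stability trick added here; it does not change the result.

```python
import math


def softmax(scores):
    """Map a list of real scores to probabilities that sum to 1."""
    shift = max(scores)  # stability trick: exp() never sees a large positive value
    exps = [math.exp(x - shift) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


probabilities = softmax([2.0, 1.0, 0.1])
print(probabilities)
print(sum(probabilities))
```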

Object detection

Types of models There are 3 main types of object recognition algorithms, for which the nature of what is predicted is different. They are described in the table below:

| Image classification | Classification w. localization | Detection |
|---|---|---|
| • Classifies a picture • Predicts probability of object | • Detects an object in a picture • Predicts probability of object and where it is located | • Detects up to several objects in a picture • Predicts probabilities of objects and where they are located |
| Traditional CNN | Simplified YOLO, R-CNN | YOLO, R-CNN |

Detection In the context of object detection, different methods are used depending on whether we just want to locate the object or detect a more complex shape in the image. The two main ones are summed up in the table below:

| Bounding box detection | Landmark detection |
|---|---|
| • Detects the part of the image where the object is located | • Detects a shape or characteristics of an object (e.g. eyes) • More granular |
| Box of center $(b_x,b_y)$, height $b_h$ and width $b_w$ | Reference points $(l_{1x},l_{1y}),$ $\dots,$ $(l_{nx},l_{ny})$ |

Intersection over Union Intersection over Union, also known as $\textrm{IoU}$, is a function that quantifies how correctly positioned a predicted bounding box $B_p$ is over the actual bounding box $B_a$. It is defined as:

$$\textrm{IoU}(B_p,B_a) = \frac{B_p \cap B_a}{B_p \cup B_a}$$

Remark: we always have $\textrm{IoU}\in[0,1]$. By convention, a predicted bounding box $B_p$ is considered as being reasonably good if $\textrm{IoU}(B_p,B_a)\geqslant0.5$.
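Intersection over Union is the area of overlap of the two boxes divided by the area of their union. The sketch below computes it for axis-aligned boxes given as `(b_x, b_y, b_h, b_w)` tuples (center, height, width), matching the bounding-box description above; the function name and the tuple layout are illustrative choices.

```python
def iou(box_p, box_a):
    """IoU of two boxes given as (b_x, b_y, b_h, b_w): center, height, width."""
    def corners(box):
        b_x, b_y, b_h, b_w = box
        return b_x - b_w / 2, b_y - b_h / 2, b_x + b_w / 2, b_y + b_h / 2

    p_x1, p_y1, p_x2, p_y2 = corners(box_p)
    a_x1, a_y1, a_x2, a_y2 = corners(box_a)

    # Width and height of the overlap, clamped at zero when boxes are disjoint.
    inter_w = max(0.0, min(p_x2, a_x2) - max(p_x1, a_x1))
    inter_h = max(0.0, min(p_y2, a_y2) - max(p_y1, a_y1))
    intersection = inter_w * inter_h

    area_p = (p_x2 - p_x1) * (p_y2 - p_y1)
    area_a = (a_x2 - a_x1) * (a_y2 - a_y1)
    union = area_p + area_a - intersection
    return intersection / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (0, 0, 2, 2)))  # 1.0
print(iou((0, 0, 2, 2), (5, 5, 2, 2)))  # 0.0
```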

Anchor boxes Anchor boxing is a technique used to predict overlapping bounding boxes. In practice, the network is allowed to predict more than one box simultaneously, where each box prediction is constrained to have a given set of geometrical properties. For instance, the first prediction can potentially be a rectangular box of a given form, while the second will be another rectangular box of a different geometrical form.

Non-max suppression The non-max suppression technique aims at removing duplicate overlapping bounding boxes of a same object by selecting the most representative ones. After having removed all boxes having a probability prediction lower than 0.6, the following steps are repeated while there are boxes remaining:

For a given class,

• Step 1: Pick the box with the largest prediction probability.

• Step 2: Discard any box having an $\textrm{IoU}\geqslant0.5$ with the previous box.
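The steps above can be sketched as follows for the detections of a single class. The data layout, a list of `(probability, (b_x, b_y, b_h, b_w))` pairs, and the function names are assumptions made for illustration; the thresholds 0.6 and 0.5 are the ones quoted in the text.

```python
def iou(box_p, box_a):
    """IoU of two boxes given as (b_x, b_y, b_h, b_w): center, height, width."""
    def corners(box):
        b_x, b_y, b_h, b_w = box
        return b_x - b_w / 2, b_y - b_h / 2, b_x + b_w / 2, b_y + b_h / 2

    p_x1, p_y1, p_x2, p_y2 = corners(box_p)
    a_x1, a_y1, a_x2, a_y2 = corners(box_a)
    inter_w = max(0.0, min(p_x2, a_x2) - max(p_x1, a_x1))
    inter_h = max(0.0, min(p_y2, a_y2) - max(p_y1, a_y1))
    intersection = inter_w * inter_h
    union = ((p_x2 - p_x1) * (p_y2 - p_y1)
             + (a_x2 - a_x1) * (a_y2 - a_y1) - intersection)
    return intersection / union if union > 0 else 0.0


def non_max_suppression(detections, prob_threshold=0.6, iou_threshold=0.5):
    """detections: list of (probability, box) pairs for ONE class."""
    # Drop low-probability boxes, then sort the rest from most to least likely.
    remaining = sorted((d for d in detections if d[0] >= prob_threshold),
                       key=lambda d: d[0], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)          # Step 1: box with the largest probability
        kept.append(best)
        # Step 2: discard boxes that overlap the chosen box too much.
        remaining = [d for d in remaining if iou(best[1], d[1]) < iou_threshold]
    return kept


detections = [
    (0.9, (0, 0, 2, 2)),
    (0.8, (0.1, 0, 2, 2)),   # near-duplicate of the first box
    (0.7, (5, 5, 2, 2)),
    (0.3, (9, 9, 2, 2)),     # below the probability threshold
]
print(non_max_suppression(detections))
```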

YOLO You Only Look Once (YOLO) is an object detection algorithm that performs the following steps:

• Step 1: Divide the input image into a $G\times G$ grid.

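• Step 2: For each grid cell, run a CNN that predicts $y$ of the following form:

$$y = \big[p_c, b_x, b_y, b_h, b_w, c_1, c_2, \dots, c_p, \dots\big]^T \in \mathbb{R}^{G\times G\times k\times(5+p)}$$

where $p_c$ is the probability of detecting an object, $b_x, b_y, b_h, b_w$ are the properties of the detected bounding box, $c_1, \dots, c_p$ is a one-hot representation of which of the $p$ classes was detected, and $k$ is the number of anchor boxes.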

• Step 3: Run the non-max suppression algorithm to remove any potential duplicate overlapping bounding boxes.

Remark: when $p_c=0$, then the network does not detect any object. In that case, the corresponding predictions $b_x, ..., c_p$ have to be ignored.

R-CNN Region with Convolutional Neural Networks (R-CNN) is an object detection algorithm that first segments the image to find potentially relevant bounding boxes and then runs the detection algorithm to find the most probable objects in those bounding boxes.

Remark: although the original algorithm is computationally expensive and slow, newer architectures enabled the algorithm to run faster, such as Fast R-CNN and Faster R-CNN.

Face verification and recognition

Types of models The two main types of model are summed up in the table below:

| Face verification | Face recognition |
|---|---|
| • Is this the correct person? • One-to-one lookup | • Is this one of the $K$ persons in the database? • One-to-many lookup |

One Shot Learning One Shot Learning is a face verification algorithm that uses a limited training set to learn a similarity function that quantifies how different two given images are. The similarity function applied to two images is often noted $d(\textrm{image 1}, \textrm{image 2}).$

Siamese Network Siamese Networks aim at learning how to encode images to then quantify how different two images are. For a given input image $x^{(i)}$, the encoded output is often noted as $f(x^{(i)})$.

Triplet loss The triplet loss $\ell$ is a loss function computed on the embedding representation of a triplet of images $A$ (anchor), $P$ (positive) and $N$ (negative). The anchor and the positive example belong to the same class, while the negative example belongs to another one. By calling $\alpha\in\mathbb{R}^+$ the margin parameter, this loss is defined as follows:

$$\ell(A,P,N) = \max\left(d(A,P) - d(A,N) + \alpha, 0\right)$$
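A small sketch of the triplet loss in its common form, $\max(d(A,P) - d(A,N) + \alpha, 0)$, computed on already-encoded images. Taking $d$ to be the squared Euclidean distance between embeddings and using a margin of 0.2 are assumptions made for illustration.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero once the negative is farther from the anchor than the positive by the margin."""
    return max(squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin, 0.0)


# Negative is far away: the constraint is satisfied and the loss is zero.
print(triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 0.0]))
# Negative is as close as the positive: the loss equals the margin.
print(triplet_loss([0.0, 0.0], [1.0, 0.0], [0.0, 1.0]))
```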

Neural style transfer

Motivation The goal of neural style transfer is to generate an image $G$ based on a given content $C$ and a given style $S$.

Activation In a given layer $l$, the activation is noted $a^{[l]}$ and is of dimensions $n_H\times n_w\times n_c$.

Content cost function The content cost function $J_{\textrm{content}}(C,G)$ is used to determine how the generated image $G$ differs from the original content image $C$. It is defined as follows:

$$J_{\textrm{content}}(C,G) = \frac{1}{2}\left\|a^{[l](C)} - a^{[l](G)}\right\|^2$$

Style matrix The style matrix $G^{[l]}$ of a given layer $l$ is a Gram matrix where each of its elements $G_{kk'}^{[l]}$ quantifies how correlated the channels $k$ and $k'$ are. It is defined with respect to activations $a^{[l]}$ as follows:

$$G_{kk'}^{[l]} = \sum_{i=1}^{n_H^{[l]}}\sum_{j=1}^{n_w^{[l]}} a_{ijk}^{[l]}\, a_{ijk'}^{[l]}$$

Remark: the style matrix for the style image and the generated image are noted $G^{[l](S)}$ and $G^{[l](G)}$ respectively.

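Style cost function The style cost function $J_{\textrm{style}}(S,G)$ is used to determine how the generated image $G$ differs from the style $S$. For a given layer $l$, it is defined as follows:

$$J_{\textrm{style}}^{[l]}(S,G) = \frac{1}{(2 n_H n_w n_c)^2}\left\|G^{[l](S)} - G^{[l](G)}\right\|_F^2 = \frac{1}{(2 n_H n_w n_c)^2}\sum_{k,k'=1}^{n_c}\left(G_{kk'}^{[l](S)} - G_{kk'}^{[l](G)}\right)^2$$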

Overall cost function The overall cost function is defined as being a combination of the content and style cost functions, weighted by parameters $\alpha,\beta$, as follows:

$$J(G) = \alpha J_{\textrm{content}}(C,G) + \beta J_{\textrm{style}}(S,G)$$

Remark: a higher value of $\alpha$ will make the model care more about the content while a higher value of $\beta$ will make it care more about the style.
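The pieces of the style-transfer objective can be sketched in plain Python on a tiny activation volume stored as nested lists `a[i][j][k]` (row, column, channel). The normalization constants, $\frac{1}{2}$ for the content cost and $\frac{1}{(2 n_H n_w n_c)^2}$ for the style cost, follow a common convention and should be read as assumptions, as should the function names and the use of a single layer for both costs.

```python
def gram_matrix(a):
    """Style matrix: G[k][k'] = sum over positions (i, j) of a[i][j][k] * a[i][j][k']."""
    n_c = len(a[0][0])
    pixels = [pixel for row in a for pixel in row]  # flatten the spatial grid
    return [[sum(p[k] * p[kp] for p in pixels) for kp in range(n_c)]
            for k in range(n_c)]


def content_cost(a_content, a_generated):
    """Half the squared difference between two activation volumes."""
    diffs = [(c - g) ** 2
             for row_c, row_g in zip(a_content, a_generated)
             for pix_c, pix_g in zip(row_c, row_g)
             for c, g in zip(pix_c, pix_g)]
    return 0.5 * sum(diffs)


def style_cost(a_style, a_generated):
    """Normalized squared difference between the two style (Gram) matrices."""
    n_h, n_w, n_c = len(a_style), len(a_style[0]), len(a_style[0][0])
    g_s, g_g = gram_matrix(a_style), gram_matrix(a_generated)
    squared = sum((g_s[k][kp] - g_g[k][kp]) ** 2
                  for k in range(n_c) for kp in range(n_c))
    return squared / (2 * n_h * n_w * n_c) ** 2


def total_cost(a_content, a_style, a_generated, alpha=1.0, beta=1.0):
    """Weighted sum: alpha favors content, beta favors style."""
    return (alpha * content_cost(a_content, a_generated)
            + beta * style_cost(a_style, a_generated))


# A 1 x 1 spatial grid with 2 channels keeps the arithmetic easy to follow.
activation = [[[1.0, 2.0]]]
blank = [[[0.0, 0.0]]]
print(gram_matrix(activation))                 # [[1.0, 2.0], [2.0, 4.0]]
print(total_cost(activation, activation, blank, alpha=1.0, beta=2.0))
```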

Architectures using computational tricks

Generative Adversarial Network Generative adversarial networks, also known as GANs, are composed of a generative and a discriminative model. The generative model aims at generating the most truthful output, which is fed into the discriminative model, which in turn aims at differentiating generated images from true ones.

Remark: use cases using variants of GANs include text to image, music generation and synthesis.

ResNet The Residual Network architecture (also called ResNet) uses residual blocks with a high number of layers meant to decrease the training error. The residual block has the following characterizing equation:

$$a^{[l+2]} = g\left(a^{[l]} + z^{[l+2]}\right)$$

Inception Network This architecture uses inception modules and tries different convolutions in order to increase its performance through feature diversification. In particular, it uses the $1\times1$ convolution trick to limit the computational burden.
